Welcome to ZhiJian!¶

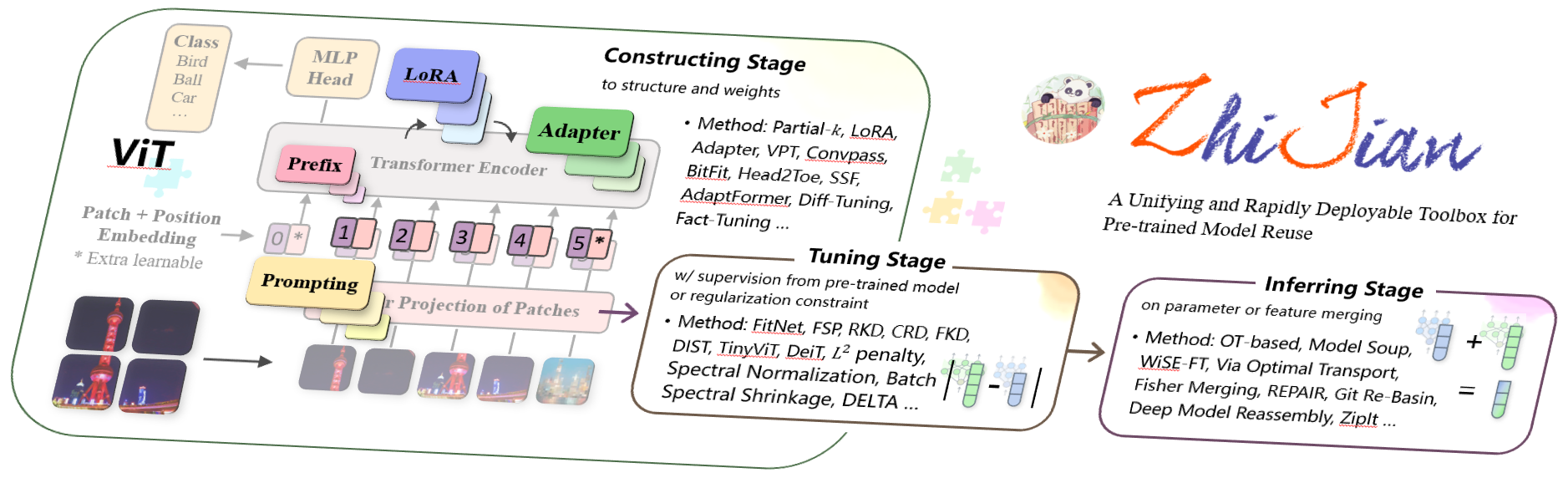

ZhiJian (执简驭繁) is a comprehensive and user-friendly PyTorch-based toolbox for leveraging foundation pre-trained models and their fine-tuned counterparts to extract knowledge and expedite learning in real-world tasks, i.e., serving the Model Reuse tasks.

The rapid progress in deep learning has led to the emergence of numerous open-source Pre-Trained Models (PTMs) on platforms like PyTorch, TensorFlow, and HuggingFace Transformers. Leveraging these PTMs for specific tasks empowers them to handle objectives effectively, creating valuable resources for the machine-learning community. Reusing PTMs is vital in enhancing target models’ capabilities and efficiency, achieved through adapting the architecture, customizing learning on target data, or devising optimized inference strategies to leverage PTM knowledge. To facilitate a holistic consideration of various model reuse strategies, ZhiJian categorizes model reuse methods into three sequential modules: Architect, Tuner, and Merger, aligning with the stages of model preparation, model learning, and model inference on the target task, respectively. The provided interface methods include:

A rchitect Module

The Architect module involves modifying the pre-trained model to fit the target task, and reusing certain parts of the pre-trained model while introducing new learnable parameters with specialized structures.

Linear Probing & Partial-k, How transferable are features in deep neural networks? In: NeurIPS’14. [Paper]

Adapter, Parameter-Efficient Transfer Learning for NLP. In: ICML’19. [Paper]

Diff Pruning, Parameter-Efficient Transfer Learning with Diff Pruning. In: ACL’21. [Paper]

LoRA, LoRA: Low-Rank Adaptation of Large Language Models. In: ICLR’22. [Paper]

Visual Prompt Tuning / Prefix, Visual Prompt Tuning. In: ECCV’22. [Paper]

Head2Toe, Head2Toe: Utilizing Intermediate Representations for Better Transfer Learning. In:ICML’22. [Paper]

Scaling & Shifting, Scaling & Shifting Your Features: A New Baseline for Efficient Model Tuning. In: NeurIPS’22. [Paper]

AdaptFormer, AdaptFormer: Adapting Vision Transformers for Scalable Visual Recognition. In: NeurIPS’22. [Paper]

BitFit, BitFit: Simple Parameter-efficient Fine-tuning for Transformer-based Masked Language-models. In: ACL’22. [Paper]

Convpass, Convolutional Bypasses Are Better Vision Transformer Adapters. In: Tech Report 07-2022. [Paper]

Fact-Tuning, FacT: Factor-Tuning for Lightweight Adaptation on Vision Transformer. In: AAAI’23. [Paper]

VQT, Visual Query Tuning: Towards Effective Usage of Intermediate Representations for Parameter and Memory Efficient Transfer Learning. In: CVPR’23. [Paper]

T uner Module

The Tuner module focuses on training the target model with guidance from pre-trained model knowledge to expedite the optimization process, e.g., via adjusting objectives, optimizers, or regularizers.

Knowledge Transfer and Matching, NeC4.5: neural ensemble based C4.5. In: IEEE Trans. Knowl. Data Eng. 2004. [Paper]

FitNet, FitNets: Hints for Thin Deep Nets. In: ICLR’15. [Paper]

LwF, Learning without Forgetting. In: ECCV’16. [Paper]

FSP, A Gift from Knowledge Distillation: Fast Optimization, Network Minimization and Transfer Learning. In: CVPR’17. [Paper]

NST, Like What You Like: Knowledge Distill via Neuron Selectivity Transfer. In: CVPR’17. [Paper]

RKD, Relational Knowledge Distillation. In: CVPR’19. [Paper]

SPKD, Similarity-Preserving Knowledge Distillation. In: CVPR’19. [Paper]

CRD, Contrastive Representation Distillation. In: ICLR’20. [Paper]

REFILLED, Distilling Cross-Task Knowledge via Relationship Matching. In: CVPR’20. [Paper]

WiSE-FT, Robust fine-tuning of zero-shot models. In: CVPR’22. [Paper]

L2 penalty / L2 SP, Explicit Inductive Bias for Transfer Learning with Convolutional Networks. In:ICML’18. [Paper]

Spectral Norm, Spectral Normalization for Generative Adversarial Networks. In: ICLR’18. [Paper]

BSS, Catastrophic Forgetting Meets Negative Transfer:Batch Spectral Shrinkage for Safe Transfer Learning. In: NeurIPS’19.. [Paper]

DELTA, DELTA: DEep Learning Transfer using Feature Map with Attention for Convolutional Networks. In: ICLR’19. [Paper]

DeiT, Training data-efficient image transformers & distillation through attention. In ICML’21. [Paper]

DIST, Knowledge Distillation from A Stronger Teacher. In: NeurIPS’22. [Paper]

M erger Module

The Merger module influences the inference phase by either reusing pre-trained features or incorporating adapted logits from the pre-trained model.

Logits Ensemble, Ensemble Methods: Foundations and Algorithms. 2012. [Book]

Nearest Class Mean, Distance-Based Image Classification: Generalizing to New Classes at Near-Zero Cost. In: IEEE Trans. Pattern Anal. Mach. Intell. 2013. [Paper]

SimpleShot, SimpleShot: Revisiting Nearest-Neighbor Classification for Few-Shot Learning. In: CVPR’19. [Paper]

via Optimal Transport, Model Fusion via Optimal Transport. In: NeurIPS’20. [Paper]

Model Soup, Model soups: averaging weights of multiple fine-tuned models improves accuracy without increasing inference time. In: ICML’22. [Paper]

Fisher Merging, Merging Models with Fisher-Weighted Averaging. In: NeurIPS’22. [Paper]

Deep Model Reassembly, Deep Model Reassembly. In: NeurIPS’22. [Paper]

REPAIR, REPAIR: REnormalizing Permuted Activations for Interpolation Repair. In: ICLR’23. [Paper]

Git Re-Basin, Git Re-Basin: Merging Models modulo Permutation Symmetries. In: ICLR’23. [Paper]

ZipIt, ZipIt! Merging Models from Different Tasks without Training. [Paper]

💡 ZhiJian also has the following highlights:

Support reuse of various pre-trained model zoo, including:

PyTorch Torchvision; OpenAI CLIP; 🤗Hugging Face PyTorch Image Models (timm), Transformers

Other popular projects, e.g., vit-pytorch (stars 14k)

Extremely easy to get started and customize

Get started with a 10 minute blitz [Open In Colab]

Customize datasets and pre-trained models with step-by-step instructions [Open In Colab]

Feel free to create a novel approach for reusing pre-trained model [Open In Colab]

Concise things do big

Only ~5000 lines of the base code, with incorporating method like building LEGO blocks

State-of-the-art results on VTAB benchmark with approximately 10k experiments [here]

Support friendly guideline and comprehensive documentation to custom dataset and pre-trained model [here]

🔥 The Naming of ZhiJian: In Chinese “ZhiJian-YuFan” means handling complexity with concise and efficient methods. Given the variations in pre-trained models and the deployment overhead of full parameter fine-tuning, ZhiJian represents a solution that is easily reusable, maintains high accuracy, and maximizes the potential of pre-trained models. “执简驭繁”的意思是用简洁高效的方法驾驭纷繁复杂的事物。“繁”表示现有预训练模型和复用方法种类多、差异大、部署难,所以取名”执简”的意思是通过该工具包,能轻松地驾驭模型复用方法,易上手、快复用、稳精度,最大限度地唤醒预训练模型的知识。

🕹️ Quick Start¶

An environment with Python 3.7+ from conda, venv, or virtualenv.

Install ZhiJian using pip:

$ pip install zhijian

For more details please click installation instructions.

[Option] Install with the newest version through GitHub:

$ pip install git+https://github.com/zhangyikaii/lamda-zhijian.git@main --upgrade

Open your python console and type:

import zhijian print(zhijian.__version__)

If no error occurs, you have successfully installed ZhiJian.

📚 Documentation¶

The tutorials and API documentation are hosted on zhijian.readthedocs.io

中文文档位于 zhijian.readthedocs.io/zh

Why ZhiJian?¶

Related Library |

Stars |

# of Alg. |

# of Model |

# of Dataset |

# of Fields |

LLM Supp. |

Docs. |

|---|---|---|---|---|---|---|---|

8k+ |

6 |

~15 |

–(3) |

1 (a) |

✔️ |

✔️ |

|

1k+ |

10 |

~15 |

–(3) |

1 (a) |

❌ |

✔️ |

|

2k+ |

4 |

5 |

~20 |

1 (a) |

✔️ |

❌ |

|

1k+ |

20 |

2 |

2 |

1 (b) |

❌ |

❌ |

|

608 |

10 |

3 |

2 |

1 (c) |

❌ |

❌ |

|

255 |

3 |

3 |

5 |

1 (d) |

❌ |

❌ |

|

410 |

3 |

5 |

4 |

1 (d) |

❌ |

❌ |

|

ZhiJian (Ours) |

ing |

30+ |

~50 |

19 |

1 (a,b,c,d) |

✔️ |

✔️ |

Tutorials

API Docs

Community